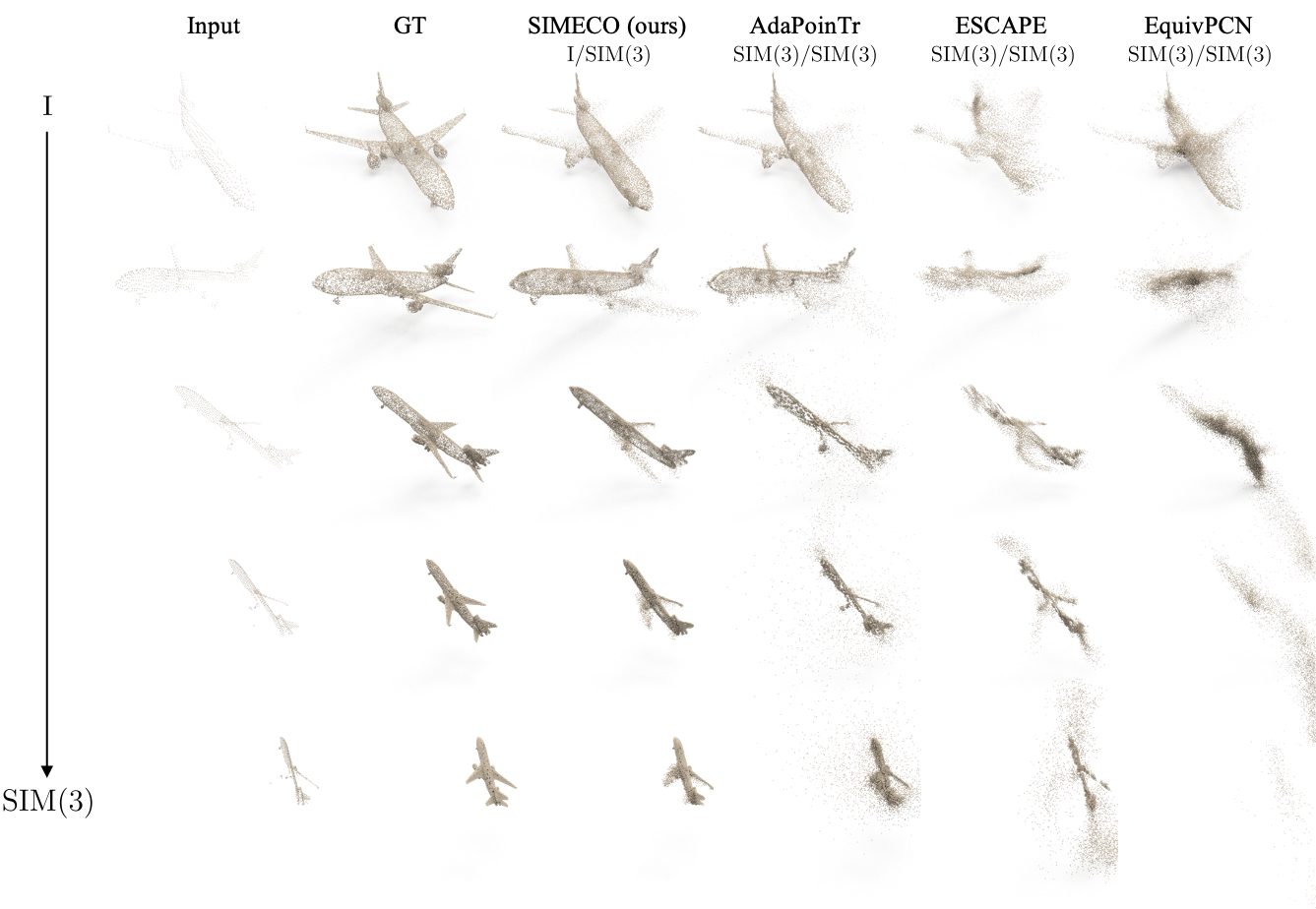

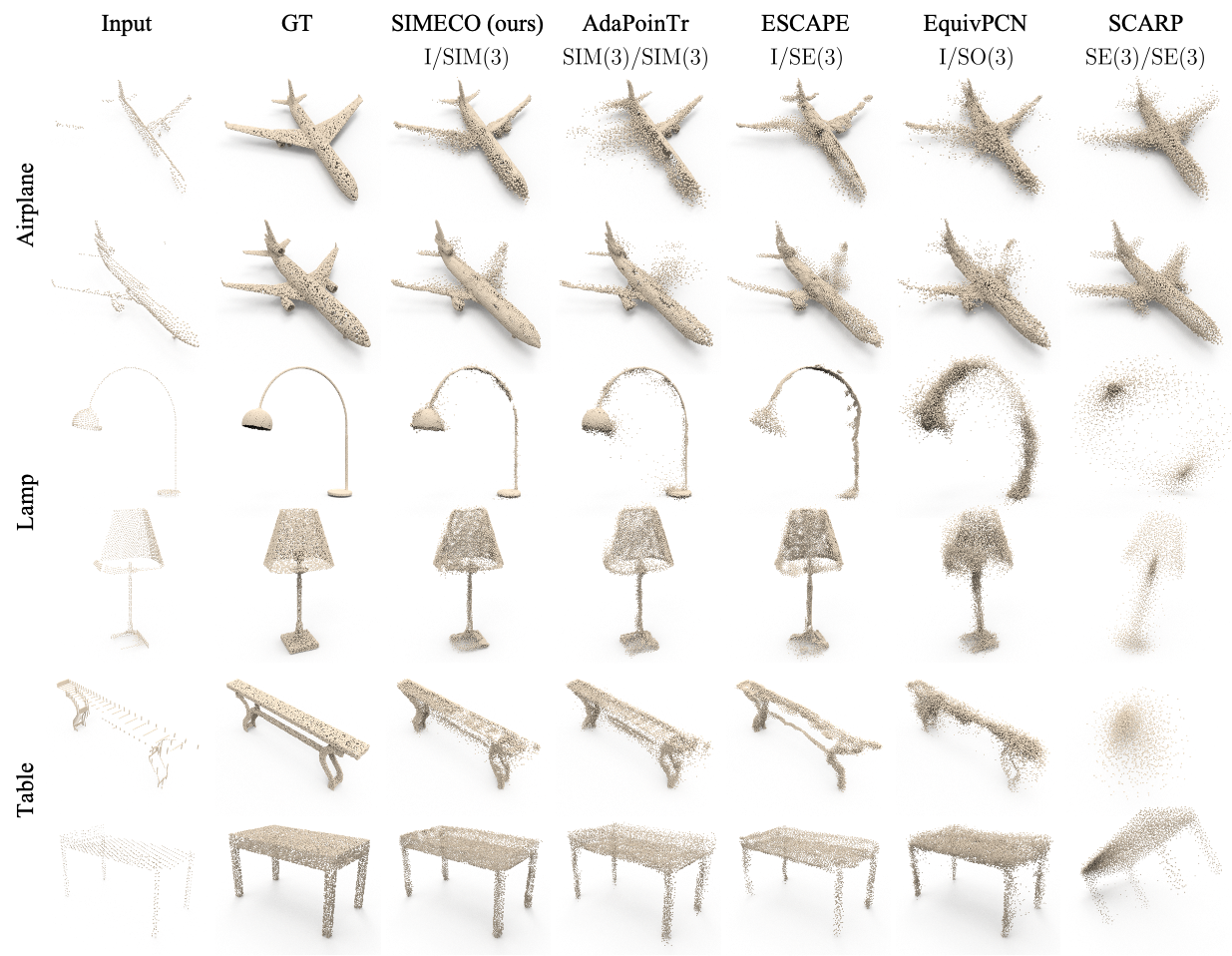

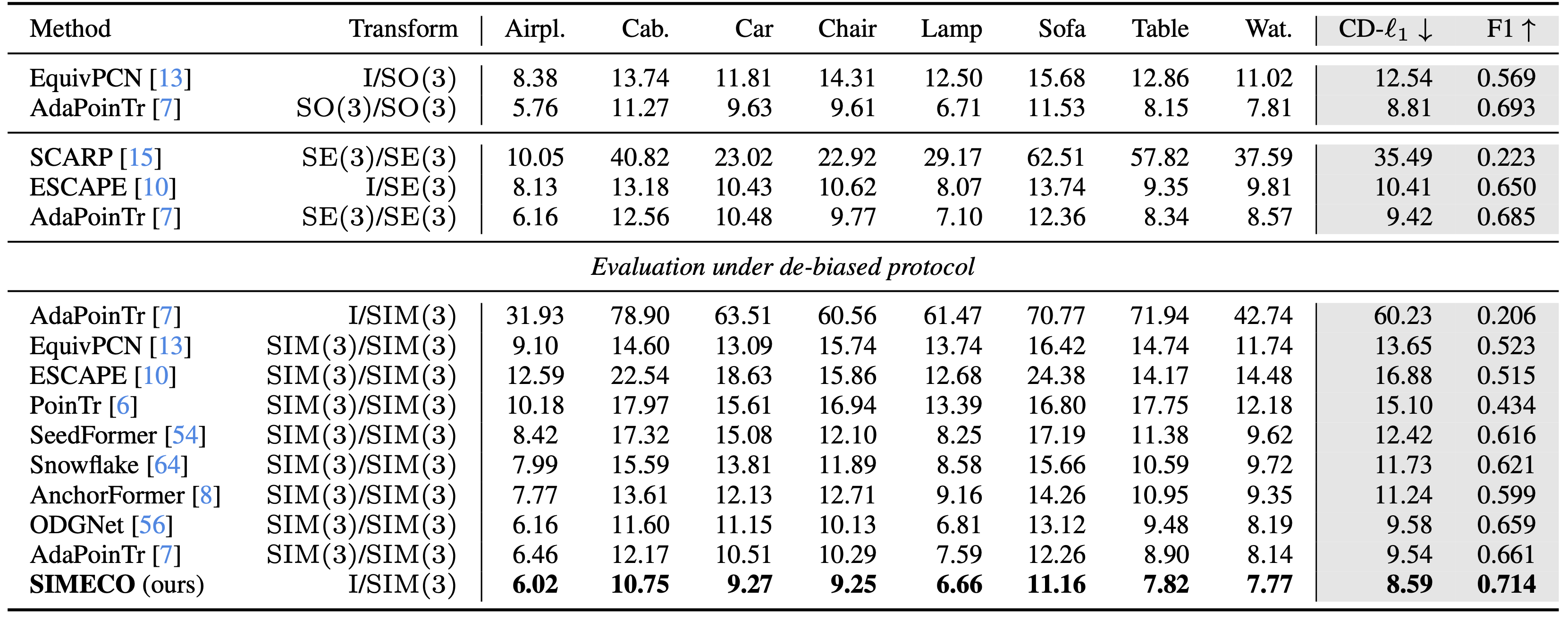

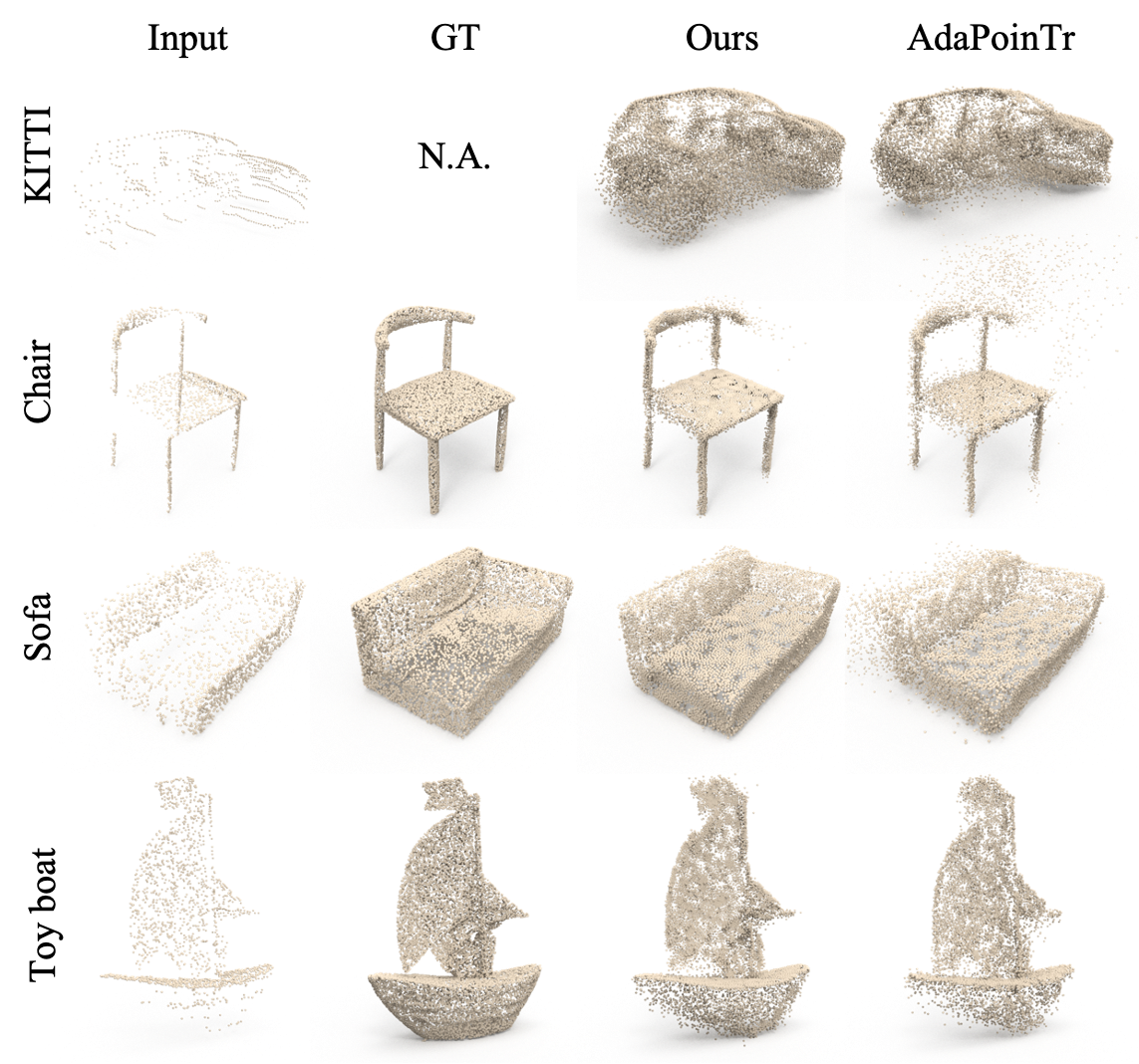

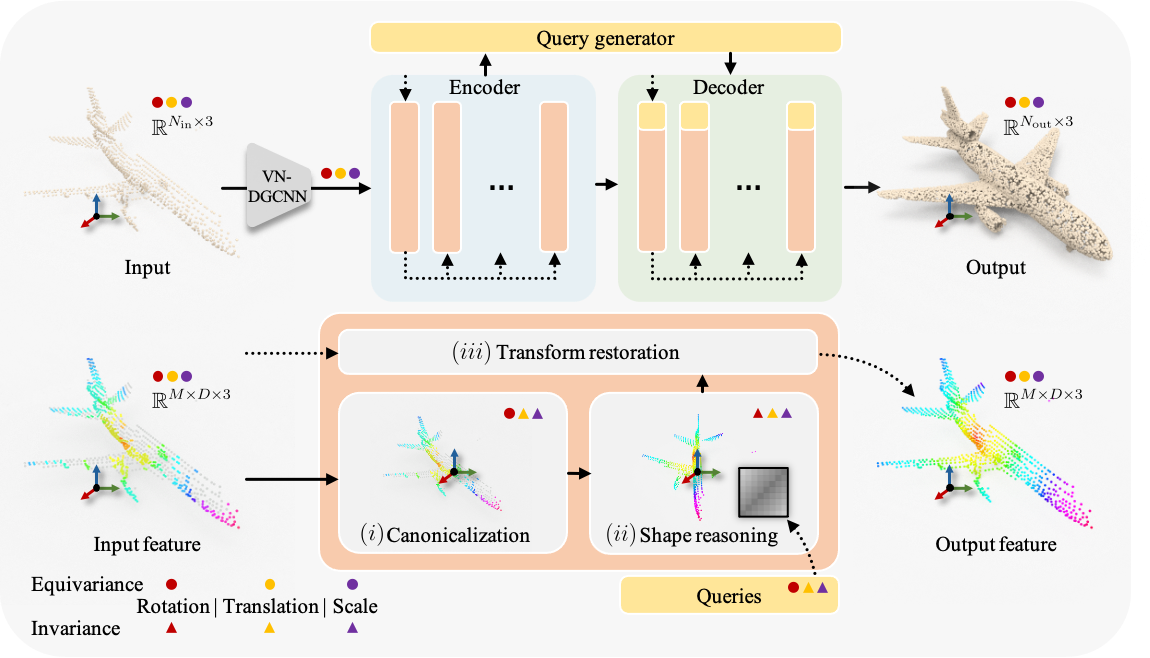

3D shape completion methods typically assume scans are pre-aligned to a canonical frame. This leaks pose and scale cues that networks may exploit to memorize absolute positions rather than inferring intrinsic geometry. When such alignment is absent in real data, performance collapses. We argue that robust generalization demands architectural equivariance to the similarity group, SIM(3), so the model remains agnostic to pose and scale. Following this principle, we introduce the first SIM(3)-equivariant shape completion network, whose modular layers successively canonicalize features, reason over similarity-invariant geometry, and restore the original frame. Under a de-biased evaluation protocol that removes the hidden cues, our model outperforms both equivariant and augmentation baselines on the PCN benchmark. It also sets new cross-domain records on real driving and indoor scans, lowering minimal matching distance on KITTI by 17% and Chamfer distance \(\ell_1\) on OmniObject3D by 14%. Perhaps surprisingly, ours under the stricter protocol still outperforms competitors under their biased settings. These results establish full SIM(3) equivariance as an effective route to truly generalizable shape completion.

@article{wang2025simeco,

title={Learning Generalizable Shape Completion with SIM(3) Equivariance},

author={Yuqing Wang and Zhaiyu Chen and Xiao Xiang Zhu},

journal={arXiv preprint arXiv:2509.26631},

year={2025}

}